You fire off a survey to gauge customer satisfaction. Days pass. Responses arrive. But when you dig in, the results clash with what you hear in calls. Frustrating, right? These mismatches stem from sneaky survey errors.

They distort your data and lead you astray. Spot them early, and you reclaim control. This guide breaks down the top errors, shows why they bite, and hands you fixes. Walk away with surveys that deliver truth, not noise.

Spot the Sneaky Errors in Your Surveys

Surveys seem straightforward. Ask questions, collect answers, analyze. Yet errors creep in at every step. They range from sloppy wording to flawed reach. Ignore them, and your insights crumble.

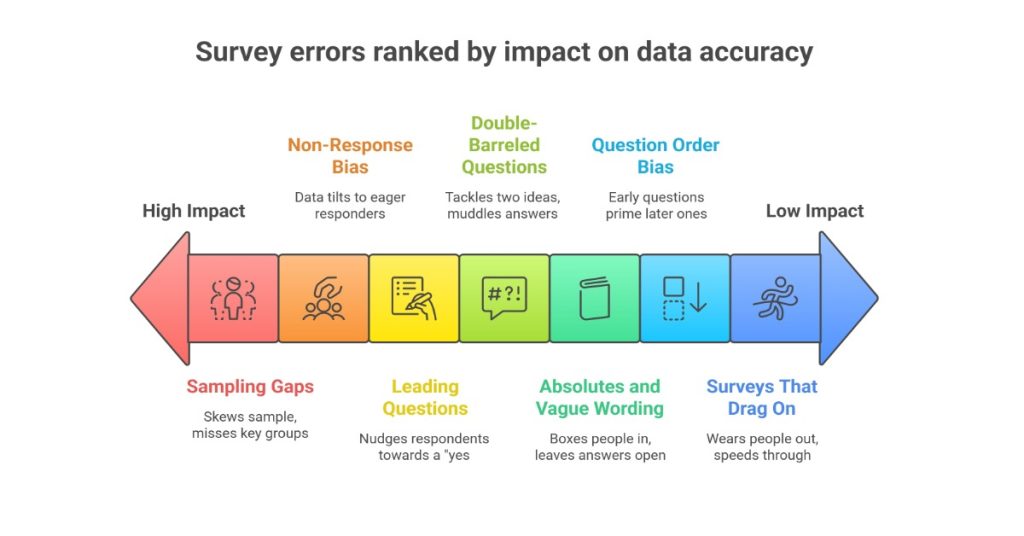

Here are seven common culprits. Each packs a punch on your data’s worth.

1. Leading or Loaded Questions

You nudge respondents toward a “yes” without meaning to. Words like “obviously” or “don’t you agree” tilt the scale.

Example: “How much do you love our speedy delivery?” Pushes folks to praise, even if they wait weeks.

2. Double-Barreled Questions

One question tackles two ideas. Respondents can’t split their thoughts. You end up with muddled answers.

Example: “Rate the comfort and price of our chairs.” Comfort shines, but price stinks? Tough call.

3. Absolutes and Vague Wording

Words like “always” or “never” box people in. Or you skip details, leaving answers wide open.

Example: “Do you ever use our app?” Forces yes/no on sporadic users. Better: “How often did you use our app last month?”

4. Surveys That Drag On

Too many questions wear people out. They quit halfway or speed through without thinking.

Example: A 50-question beast on product feedback. Most bail after 10.

5. Question Order Bias

Early questions prime later ones. A tough topic up top sours the mood.

Example: Start with complaints, then ask about loyalty. Responses skew negative.

6. Sampling and Coverage Gaps

You miss key groups or pick from a skewed pool. Your sample doesn’t mirror reality.

Example: Email surveys to a tech-savvy list. Older customers stay silent.

7. Non-Response Bias

Busy folks ignore you. Your data tilts toward the eager responders who often differ from the rest.

Example: Night owls skip morning links. You overcount early birds.

These errors don’t announce themselves. They hide in plain sight, eroding trust in your findings.

Why Survey Errors Derail Your Decisions

You build surveys for insights. Clear data sparks smart moves like tweaking products or targeting ads. Errors flip that script. They breed bad calls that cost time and cash.

Take response rates. The average online survey pulls just 44.1% participation. Low turnout means non-responders shape your blind spots. Their silence amps up bias. One study on federal surveys notes falling rates threaten core metrics like employment figures. Your customer pulse? Same risk.

Length hits hard too. Long surveys tank completion. In one test across 2,228 invites, a 72-question version scored 51% response and 37% full completion. Trim to 13 questions? Jump to 64% response and 63% completion. Shorter ones keep reliability high: Cronbach’s alpha stayed at 0.81 or above across lengths. But dropouts inflate errors. Margins of error swell, confidence dips.
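To see how fewer completes widen the margin of error, here is a minimal sketch. It assumes a simple random sample and the textbook formula for a proportion at 95% confidence. The 1,000-invite figure is hypothetical, not from the study; only the 37% and 63% completion rates come from the numbers above.

```python
import math

def margin_of_error(completes: int, p: float = 0.5, z: float = 1.96) -> float:
    """Approximate 95% margin of error for a proportion (simple random sample)."""
    return z * math.sqrt(p * (1 - p) / completes)

# Hypothetical: 1,000 invites at the completion rates quoted above.
long_form = margin_of_error(370)   # 37% of 1,000 finish the long version
short_form = margin_of_error(630)  # 63% of 1,000 finish the short version

print(f"long: +/-{long_form:.1%}, short: +/-{short_form:.1%}")
# prints "long: +/-5.1%, short: +/-3.9%"
```

This covers sampling error only. It says nothing about non-response bias, which can skew results even when the margin looks tight.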

Biased questions compound it. Leading ones can shift answers by 10-20% in polls. Imagine basing a $50K campaign on that skew. You chase ghosts.

Real sting? Wasted effort. You act on flawed data. Launch a “hit” feature nobody wants. Lose loyal users. Or overlook pain points, handing rivals an edge.

You deserve better. Clean surveys cut these risks. They yield data you trust: fuel for growth.

Fix These Errors: Step-by-Step Actions

Knowledge alone won’t save your next survey. You need moves. Start with your draft. Scrub it clean. Here’s how to tackle each error, one at a time.

Neutralize Leading and Loaded Questions

Rewrite with balance. Strip emotional hooks. Test on a friend: do they feel pushed?

Swap “How great is our support?” for “How would you rate our support?”

Add neutral options: Strongly agree to strongly disagree.

Pretest: Run five sample responses. Check for even spread.

Split Double-Barreled Traps

Hunt for “and” or “or” in questions. Break them apart. Each gets its own spot.

Bad: “How’s the taste and texture?”

Good: Two sliders, one per trait.

Pro tip: Limit to 10-15 questions total. Keeps focus sharp.
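The hunt for “and” or “or” is easy to automate as a first pass. A rough sketch: the function name and the sample draft are illustrative, and a flag means "review this," not "this is broken."

```python
import re

# Whole-word match so "support" or "our" don't trigger a false hit on "or".
CONJUNCTIONS = re.compile(r"\b(and|or)\b", re.IGNORECASE)

def flag_double_barreled(questions):
    """Return the questions that contain 'and' or 'or' for manual review."""
    return [q for q in questions if CONJUNCTIONS.search(q)]

draft = [
    "Rate the comfort and price of our chairs.",
    "How would you rate our support?",
    "How's the taste and texture?",
]

for question in flag_double_barreled(draft):
    print("Review:", question)
```

Expect some false alarms. A phrase like “terms and conditions” is one idea, so a human still makes the final call.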

Ditch Absolutes and Sharpen Clarity

Scan for extremes. Offer scales instead. Define terms upfront.

Change “Always satisfied?” to a 1-5 frequency scale.

Add examples: “Tablet PC (like iPad).”

Read aloud: Does it flow like chat? Cut fluff.

Shorten for Stamina

Cap at 5-10 minutes. Aim under 15 questions. Track time in tests.

Prioritize: What must you know? Cut the rest.

Use progress bars. Show “3 of 10 done.”

Offer incentives: $5 gift card boosts completion by 20% in trials.

Balance Question Flow

Group by theme. Start easy, build to deep. Randomize non-essentials.

Open with wins: “What worked well?”

Save sensitive questions for the middle: Build rapport first.

Test order: A/B two versions. See shifts in key metrics.
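One way to judge whether an order swap really shifted a key metric is a two-proportion z-test. The sketch below is one common choice, not the only one. The counts are made up for illustration, and it assumes respondents were randomly assigned to each version.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_z_test(hits_a, n_a, hits_b, n_b):
    """Two-sided z-test for a difference between two proportions."""
    p_a, p_b = hits_a / n_a, hits_b / n_b
    pooled = (hits_a + hits_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Hypothetical counts: respondents who say they would recommend you.
# Version A opens with complaints; version B opens with "What worked well?"
z, p_value = two_proportion_z_test(hits_a=96, n_a=200, hits_b=120, n_b=200)
print(f"z = {z:.2f}, p = {p_value:.3f}")
```

A small p-value suggests the ordering itself moved the answer. With only a few dozen responses per version, treat any result as a hint rather than proof.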

Widen Your Reach

Define your audience tight. Use diverse channels. Quotas ensure a mix.

Mix email, social, SMS. Hit all ages.

Probability samples when stakes are high: Draw at random from the full list.

Track demographics: Adjust if gaps show.
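“Adjust if gaps show” can mean more outreach. It can also mean weighting the answers you already have. The sketch below uses simple post-stratification weights, which is one standard approach and an assumption here, not something this guide prescribes. The age groups and shares are hypothetical.

```python
def poststratification_weights(population_share, sample_counts):
    """Weight each group by (population share) / (sample share)."""
    total = sum(sample_counts.values())
    return {
        group: population_share[group] / (sample_counts[group] / total)
        for group in sample_counts
    }

# Hypothetical age mix of the customer base vs. who actually answered.
population_share = {"18-34": 0.30, "35-54": 0.40, "55+": 0.30}
sample_counts = {"18-34": 90, "35-54": 80, "55+": 30}

weights = poststratification_weights(population_share, sample_counts)
for group, weight in weights.items():
    print(f"{group}: weight {weight:.2f}")
```

Here the 55+ group answers half as often as its share of customers, so each of its responses counts double. Weights cannot rescue a group with zero or very few responses; that gap needs more reach, not more math.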

Boost Responses, Cut Bias

Make it easy. Follow up once. Sweeten the pot.

Time right: Mid-week, mornings.

Remind: “You got this. It takes 4 minutes.”

Analyze dropouts: Who bails? Target them next round.
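To answer “Who bails?”, compare completion rates by segment. A small sketch, assuming your survey tool can export a segment label and a finished flag per respondent. The segment names and records are invented for illustration.

```python
from collections import defaultdict

def completion_rate_by_segment(records):
    """records: iterable of (segment, finished) pairs -> {segment: rate}."""
    started = defaultdict(int)
    finished = defaultdict(int)
    for segment, done in records:
        started[segment] += 1
        finished[segment] += int(done)
    return {segment: finished[segment] / started[segment] for segment in started}

# Hypothetical export: one (segment, finished?) pair per respondent who started.
records = [
    ("new customer", True), ("new customer", False),
    ("new customer", False), ("new customer", False),
    ("long-time customer", True), ("long-time customer", True),
    ("long-time customer", True), ("long-time customer", False),
]

rates = completion_rate_by_segment(records)
# Lowest completion first: these are the groups to target next round.
for segment, rate in sorted(rates.items(), key=lambda item: item[1]):
    print(f"{segment}: {rate:.0%} completed")
```

In this toy data, new customers finish 25% of the time against 75% for long-time customers, so the next round's reminders and incentives would go to new customers first.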

Apply these, and errors drop. Your data sharpens. Decisions stick.

Real Results: A Team’s Survey Overhaul

Numbers prove it. Take a research registry’s pivot on participant feedback surveys. They ran three versions: ultrashort (13 questions), short (25), and long (72). Goal? Gauge perceptions on research involvement.

The long one flopped. 51% responded, but only 37% finished. Data scattered: high dropouts meant skewed views from patient finishers. Reliability held (alpha 0.87), but insights blurred. Median time: 10 minutes. Burnout clear.

They slashed to ultrashort. Response hit 64%. Completions soared to 63%. Time? Two minutes flat. Reliability? Solid at 0.81 alpha. Test-retest agreement matched the long version (kappa 0.81). Key outcomes like overall ratings aligned across lengths. No quality loss.

Bonus: Compensation layered on. It nudged completions another 10-15% over uncompensated runs. The team now uses the short form as standard. They cut non-response bias, saved hours on analysis, and trust their pulse on participant needs. One tweak, big lift.

Your surveys? Same potential. Start small. Measure change.

Your Go-To Checklist for Bulletproof Surveys

You read this far. Time to act. Print this. Pin it by your desk. Run every draft through it.

- Wording Check: Neutral? One idea per question? Clear terms?

- Length Audit: Under 10 minutes? Progress shown?

- Flow Test: Logical order? Easy start?

- Reach Scan: Full audience covered? Quotas set?

- Response Plan: Incentives? Follow-ups? Dropout tracking?

- Pretest Power: Five testers. Fix flags. Soft-launch 10%.

- Ethics Edge: Opt-outs easy? Honest skips allowed?

Tick these, and you sidestep most of the common pitfalls. Launch confidently.

Wrap It Up and Launch Better

Survey errors steal your edge. They muddle messages and miss marks. But you hold the fix. Spot the seven big ones. Apply the steps. Use that checklist. Your next round? Clean data that guides you right.

Grab your draft. Edit now. Watch responses flow true. What changes first? Hit reply and share your win.